Kubernetes HA Ubuntu 24.04 Commands

Kubernetes HA Environment Setup Commands

HAProxy Load Balancer Commands

Set Hostname & Machine ID (SID) on Each Node

Set the hostname (run the matching command on its node):
sudo hostnamectl set-hostname master1
sudo hostnamectl set-hostname master2
sudo hostnamectl set-hostname master3
sudo hostnamectl set-hostname worker1
sudo hostnamectl set-hostname worker2
sudo hostnamectl set-hostname LoadBalancer

Add every node's IP and hostname (see the table below) to /etc/hosts:
sudo nano /etc/hosts

Regenerate the machine ID so that cloned VMs do not share the same one, then verify it:
sudo rm /etc/machine-id
sudo systemd-machine-id-setup
hostnamectl | grep "Machine ID"

Router VM IP Reservations

Hostname       IP              MAC address
master1        192.168.1.21    00:0c:29:75:4f:91
master2        192.168.1.22    00:50:56:2B:38:82
master3        192.168.1.23    00:50:56:38:25:54
LoadBalancer   192.168.1.24    00:0c:29:02:f7:11
worker1        192.168.1.25    00:0C:29:BB:E5:F4
worker2        192.168.1.26    00:0c:29:55:b4:0b
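The IP reservation table is the single source of node names and addresses. Below is a minimal sketch, assuming bash and the hostnames and IPs listed above, that derives both the /etc/hosts lines and the HAProxy backend server lines from one list. The function names and the rule that control-plane hostnames start with "master" are assumptions of this sketch, not part of the original setup.

```shell
#!/usr/bin/env bash
# Sketch: one node inventory (mirroring the IP reservation table) used to generate
# /etc/hosts entries and HAProxy "server" lines. Source this file, then call the
# functions. NODES and API_PORT are assumptions to adjust for your network.

API_PORT=6443
# Each entry is "<ip> <hostname>".
NODES=(
  "192.168.1.21 master1"
  "192.168.1.22 master2"
  "192.168.1.23 master3"
  "192.168.1.24 LoadBalancer"
  "192.168.1.25 worker1"
  "192.168.1.26 worker2"
)

# missing_hosts_entries FILE prints "<ip><TAB><hostname>" for every node whose
# hostname does not already appear as a whole word in FILE.
missing_hosts_entries() {
  local hosts_file=$1 entry ip name
  for entry in "${NODES[@]}"; do
    read -r ip name <<<"$entry"
    if ! grep -qw -- "$name" "$hosts_file"; then
      printf '%s\t%s\n' "$ip" "$name"
    fi
  done
}

# haproxy_server_lines prints one health-checked "server" directive for each
# node whose hostname starts with "master" (assumed naming convention),
# indented to sit inside the backend section.
haproxy_server_lines() {
  local entry ip name
  for entry in "${NODES[@]}"; do
    read -r ip name <<<"$entry"
    case $name in
      master*) printf '    server %s %s:%s check\n' "$name" "$ip" "$API_PORT" ;;
    esac
  done
}
```

On each node, `missing_hosts_entries /etc/hosts | sudo tee -a /etc/hosts` appends only the lines that are missing, so re-running it is harmless (a hostname already present with a different IP is left alone). On the LoadBalancer, the output of `haproxy_server_lines` corresponds to the server lines of the kube-master-nodes backend, and `sudo haproxy -c -f /etc/haproxy/haproxy.cfg` checks the edited config before HAProxy is restarted.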

Install and configure HAProxy on the LoadBalancer node:
sudo apt-get update && sudo apt-get upgrade -y
sudo apt-get install haproxy -y
sudo nano /etc/haproxy/haproxy.cfg

Add the following frontend and backend sections at the end of /etc/haproxy/haproxy.cfg:

frontend kubernetes-api
    bind *:6443
    mode tcp
    option tcplog
    default_backend kube-master-nodes

backend kube-master-nodes
    mode tcp
    balance roundrobin
    option tcp-check
    default-server inter 10s downinter 5s rise 2 fall 2 slowstart 60s maxconn 250 maxqueue 256 weight 100
    server master1 192.168.1.21:6443 check
    server master2 192.168.1.22:6443 check
    server master3 192.168.1.23:6443 check

Restart and enable HAProxy, check that port 6443 accepts connections, and follow the logs (the backends stay down until kubeadm init has run on the masters):
sudo systemctl restart haproxy
sudo systemctl enable haproxy
nc -v localhost 6443
sudo journalctl -u haproxy -f

Common Commands for Control Plane & Data Plane

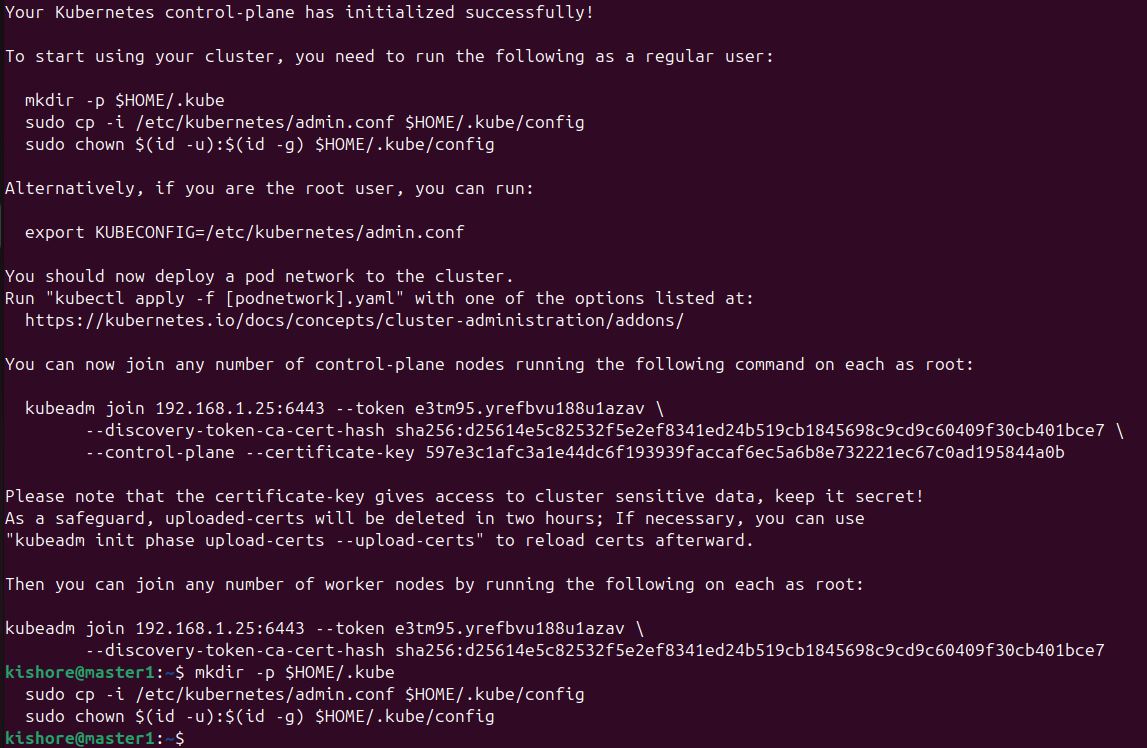

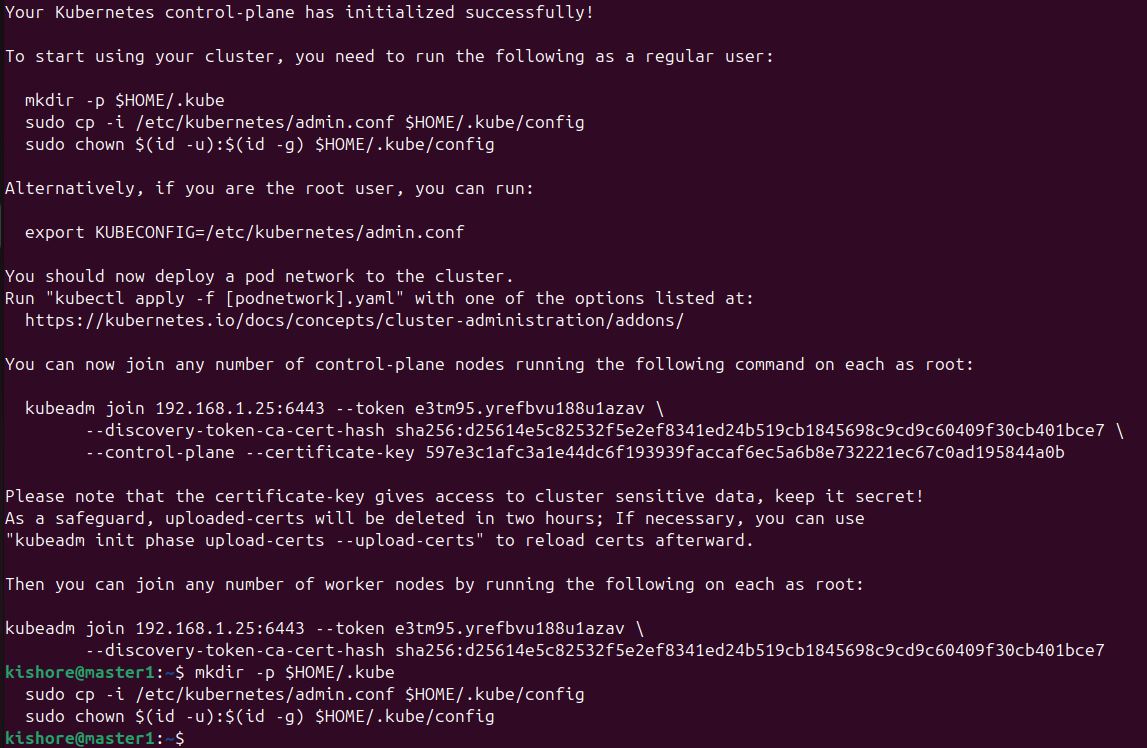

1. Install curl (and run the hostname & machine ID commands above on every node):
sudo apt install curl

2. Disable swap (the sed command comments out the /swap.img line, Ubuntu's default swap file, so swap stays off after a reboot):
sudo swapoff -a
sudo sed -i '/swap.img/s/^/#/' /etc/fstab

3. Forward IPv4 and let iptables see bridged traffic:
cat <<EOF | sudo tee /etc/modules-load.d/k8s.conf
overlay
br_netfilter
EOF
sudo modprobe overlay
sudo modprobe br_netfilter

# sysctl params required by setup; params persist across reboots
cat <<EOF | sudo tee /etc/sysctl.d/k8s.conf
net.bridge.bridge-nf-call-iptables = 1
net.bridge.bridge-nf-call-ip6tables = 1
net.ipv4.ip_forward = 1
EOF

# Apply sysctl params without reboot
sudo sysctl --system

# Verify that the br_netfilter and overlay modules are loaded
lsmod | grep br_netfilter
lsmod | grep overlay

# Verify that net.bridge.bridge-nf-call-iptables, net.bridge.bridge-nf-call-ip6tables and net.ipv4.ip_forward are set to 1
sysctl net.bridge.bridge-nf-call-iptables net.bridge.bridge-nf-call-ip6tables net.ipv4.ip_forward

4. Install the container runtime (containerd):
curl -LO https://github.com/containerd/containerd/releases/download/v1.7.14/containerd-1.7.14-linux-amd64.tar.gz
sudo tar Cxzvf /usr/local containerd-1.7.14-linux-amd64.tar.gz
curl -LO https://raw.githubusercontent.com/containerd/containerd/main/containerd.service
sudo mkdir -p /usr/local/lib/systemd/system/
sudo mv containerd.service /usr/local/lib/systemd/system/
sudo mkdir -p /etc/containerd
containerd config default | sudo tee /etc/containerd/config.toml
sudo sed -i 's/SystemdCgroup = false/SystemdCgroup = true/g' /etc/containerd/config.toml
sudo systemctl daemon-reload
sudo systemctl enable --now containerd
# Check that the containerd service is up and running
systemctl status containerd

5. Install runc:
curl -LO https://github.com/opencontainers/runc/releases/download/v1.1.12/runc.amd64
sudo install -m 755 runc.amd64 /usr/local/sbin/runc

6. Install the CNI plugins:
curl -LO https://github.com/containernetworking/plugins/releases/download/v1.5.0/cni-plugins-linux-amd64-v1.5.0.tgz
sudo mkdir -p /opt/cni/bin
sudo tar Cxzvf /opt/cni/bin cni-plugins-linux-amd64-v1.5.0.tgz

7. Install kubeadm, kubelet and kubectl:
sudo apt-get update
sudo apt-get install -y apt-transport-https ca-certificates curl gpg
sudo mkdir -p /etc/apt/keyrings
curl -fsSL https://pkgs.k8s.io/core:/stable:/v1.33/deb/Release.key | sudo gpg --dearmor -o /etc/apt/keyrings/kubernetes-apt-keyring.gpg
echo 'deb [signed-by=/etc/apt/keyrings/kubernetes-apt-keyring.gpg] https://pkgs.k8s.io/core:/stable:/v1.33/deb/ /' | sudo tee /etc/apt/sources.list.d/kubernetes.list
sudo apt-get update
sudo apt-get install -y kubelet=1.33.0-1.1 kubeadm=1.33.0-1.1 kubectl=1.33.0-1.1 --allow-downgrades --allow-change-held-packages
sudo apt-mark hold kubelet kubeadm kubectl
kubeadm version
kubelet --version
kubectl version --client
# Until kubeadm init or join runs, kubelet restarting every few seconds is expected
systemctl status kubelet
journalctl -u kubelet

8. Control plane only (worker nodes use the join command below instead). Run on master1:
sudo kubeadm init --control-plane-endpoint "192.168.1.24:6443" --upload-certs --pod-network-cidr=192.168.0.0/16 --apiserver-advertise-address=192.168.1.21
Note: Copy the join commands printed when kubeadm init completes into a notepad; they are needed to join the other nodes.
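Steps 3 and 4 are easy to get silently wrong on one node out of six. Below is a small sketch, assuming bash and awk, of checks to run on each node before kubeadm: one parses the sysctl verification output from step 3, the other looks for the SystemdCgroup = true edit from step 4. The function names are hypothetical helpers, not part of any Kubernetes tooling.

```shell
#!/usr/bin/env bash
# Preflight helpers for steps 3 and 4 (a sketch; source this file, then call the functions).
# Example usage on a node:
#   sysctl net.bridge.bridge-nf-call-iptables net.bridge.bridge-nf-call-ip6tables \
#     net.ipv4.ip_forward | check_k8s_sysctl && echo "sysctl OK"
#   check_systemd_cgroup /etc/containerd/config.toml && echo "systemd cgroup driver OK"

# The three kernel parameters that step 3 sets to 1.
REQUIRED_KEYS=(
  net.bridge.bridge-nf-call-iptables
  net.bridge.bridge-nf-call-ip6tables
  net.ipv4.ip_forward
)

# check_k8s_sysctl reads `sysctl KEY...` output ("key = value" lines) on stdin
# and returns 0 only if every required key is present and equal to 1.
check_k8s_sysctl() {
  local input key value status=0
  input=$(cat)
  for key in "${REQUIRED_KEYS[@]}"; do
    # Field 1 is the key, field 2 is "=", field 3 is the value.
    value=$(printf '%s\n' "$input" | awk -v k="$key" '$1 == k { print $3; exit }')
    if [ "$value" != "1" ]; then
      echo "not ready: $key=${value:-missing}" >&2
      status=1
    fi
  done
  return "$status"
}

# check_systemd_cgroup FILE returns 0 if a containerd config.toml contains
# "SystemdCgroup = true" (the edit made by the sed command in step 4).
check_systemd_cgroup() {
  grep -Eq '^[[:space:]]*SystemdCgroup[[:space:]]*=[[:space:]]*true' "$1"
}
```

Reading the sysctl output from stdin, rather than calling sysctl inside the function, keeps the parsing testable without root access.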
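In the init command above:
192.168.1.24 - Load balancer IP (the control-plane endpoint)
192.168.1.21 - IP of the first control-plane node, master1 (the local IP of the node running init)

Caution: the pod network CIDR 192.168.0.0/16 (Calico's default) overlaps the node network 192.168.1.0/24. Calico's documentation says to choose a different pod CIDR if 192.168.0.0/16 is already in use on your network; if you change it, set the same value as the cidr in custom-resources.yaml (step 10).

If the uploaded certificates have expired (kubeadm deletes them after two hours), re-upload them on master1 to get a new certificate key:
sudo kubeadm init phase upload-certs --upload-certs

Join master2 and master3 as additional control-plane nodes (the token, hash and key are from this cluster's init output; use your own):
sudo kubeadm join 192.168.1.24:6443 --token 0petjt.y7zn90gph4tjkqbo \
  --discovery-token-ca-cert-hash sha256:2067a10427fbb83fce3121a8c119612c722f30661ad23bcfeaec44e02ed71fa2 \
  --control-plane --certificate-key 07638a04144fce4f22d437fa4c8aa9e34a737c4f3fabf08c1c7bd57a21113e59

Join worker1 and worker2 as worker nodes:
sudo kubeadm join 192.168.1.24:6443 --token 0petjt.y7zn90gph4tjkqbo \
  --discovery-token-ca-cert-hash sha256:2067a10427fbb83fce3121a8c119612c722f30661ad23bcfeaec44e02ed71fa2

9. Prepare kubeconfig (on the control-plane nodes where you run kubectl):
mkdir -p $HOME/.kube
sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
sudo chown $(id -u):$(id -g) $HOME/.kube/config

10. Install Calico (once, from a control-plane node):
kubectl create -f https://raw.githubusercontent.com/projectcalico/calico/v3.28.0/manifests/tigera-operator.yaml
curl https://raw.githubusercontent.com/projectcalico/calico/v3.28.0/manifests/custom-resources.yaml -O
kubectl apply -f custom-resources.yaml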
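The join commands printed by kubeadm init stop working once the bootstrap token (24 hours by default) or the uploaded certificates (two hours) expire. Below is a sketch, assuming bash, of two hypothetical helpers: one appends the control-plane flags to a freshly generated worker join command, the other checks `kubectl get nodes` output after Calico is installed. The inputs come from real kubeadm commands; taking the certificate key from the last line of the upload-certs output is an assumption.

```shell
#!/usr/bin/env bash
# Join and readiness helpers (a sketch; the function names are hypothetical).
# Example on master1, assuming the certificate key is the last output line:
#   join=$(sudo kubeadm token create --print-join-command)
#   key=$(sudo kubeadm init phase upload-certs --upload-certs | tail -n 1)
#   to_control_plane_join "$join" "$key"   # run the printed command with sudo on master2/master3
#   kubectl get nodes --no-headers | all_nodes_ready && echo "all nodes Ready"

# to_control_plane_join JOIN_CMD CERT_KEY appends the control-plane flags to a
# worker join command, after checking that the key looks like a kubeadm
# certificate key (32 bytes, hex-encoded = 64 lowercase hex characters).
to_control_plane_join() {
  local join_cmd=$1 cert_key=$2
  if [[ ! $cert_key =~ ^[0-9a-f]{64}$ ]]; then
    echo "invalid certificate key: $cert_key" >&2
    return 1
  fi
  printf '%s --control-plane --certificate-key %s\n' "$join_cmd" "$cert_key"
}

# all_nodes_ready reads `kubectl get nodes --no-headers` output on stdin and
# returns 0 only if at least one node is listed and every STATUS is exactly "Ready".
all_nodes_ready() {
  awk '$2 != "Ready" { bad = 1; print "not ready: " $1 > "/dev/stderr" }
       END { if (NR == 0) bad = 1; exit bad }'
}
```

Nodes usually report NotReady until the Calico pods are running, so all_nodes_ready is most useful a few minutes after step 10.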

Management Commands

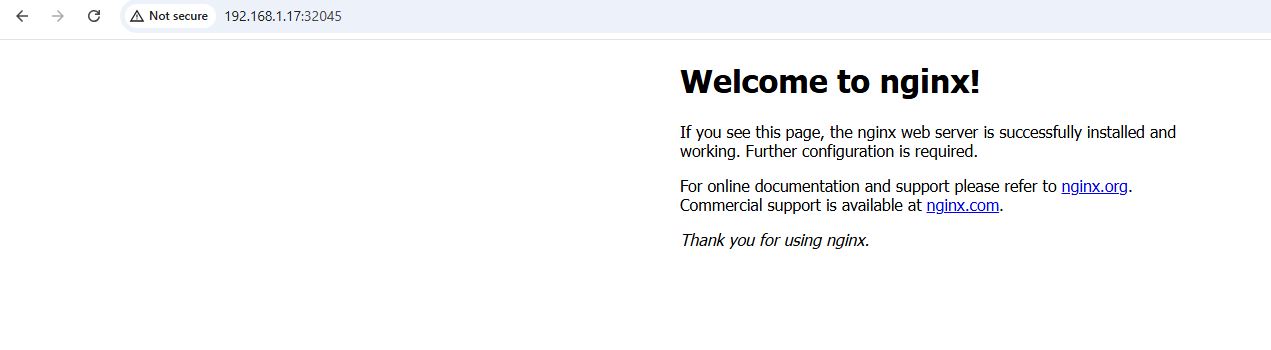

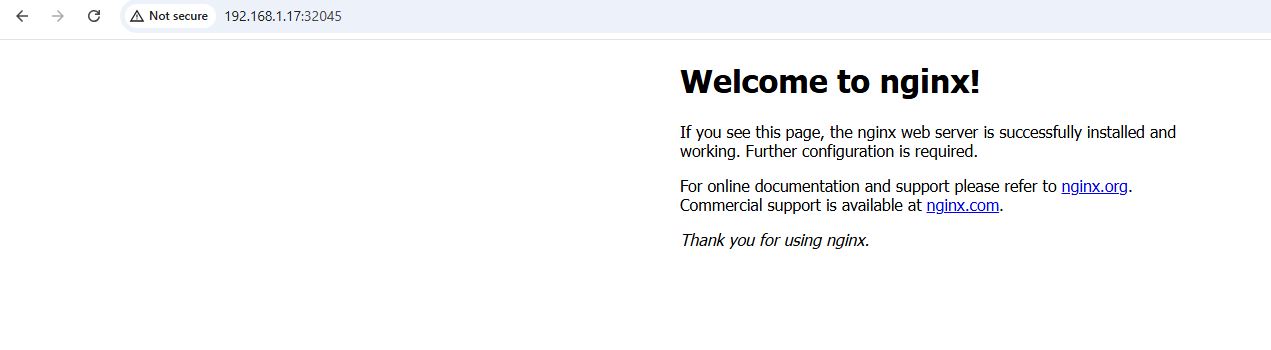

HAProxy real-time logs:
sudo journalctl -u haproxy -f

On a master, check which node holds the leader position for the scheduler and controller-manager:
kubectl get lease -n kube-system | grep scheduler
kubectl get lease -n kube-system | grep controller-manager
kubectl describe lease kube-scheduler -n kube-system
kubectl describe lease kube-controller-manager -n kube-system

Container creation (an nginx pod exposed through a NodePort service):
kubectl run nginx123 --image=nginx --port=80
kubectl expose pod nginx123 --type=NodePort --port=80 --target-port=80 --name=nginx-service
kubectl get svc -o wide
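The lease commands above print the full holder identity rather than just the node name. Below is a sketch, assuming bash, kubectl access, and that the scheduler and controller-manager identities have the form <hostname>_<uuid>, that reduces the identity to the node name and looks up the NodePort of the nginx service. The helper names are hypothetical.

```shell
#!/usr/bin/env bash
# Leader and service helpers (a sketch; source this file on a master with kubectl access).

# holder_node IDENTITY prints the node part of a lease holderIdentity.
# Assumption: identities look like "<hostname>_<uuid>", so everything before
# the first "_" is the node name.
holder_node() {
  printf '%s\n' "${1%%_*}"
}

# leader_of COMPONENT prints the node holding COMPONENT's lease in kube-system,
# e.g. leader_of kube-scheduler (requires kubectl and a working kubeconfig).
leader_of() {
  local identity
  identity=$(kubectl get lease "$1" -n kube-system -o jsonpath='{.spec.holderIdentity}') || return 1
  holder_node "$identity"
}

# nodeport_of SERVICE prints the NodePort assigned to the first port of SERVICE
# in the current namespace.
nodeport_of() {
  kubectl get svc "$1" -o jsonpath='{.spec.ports[0].nodePort}'
}
```

For example, `for c in kube-scheduler kube-controller-manager; do echo "$c: $(leader_of "$c")"; done` shows the current leaders; after shutting down the leading master, re-running it once the lease expires should show another master. `curl "http://192.168.1.25:$(nodeport_of nginx-service)"` should return the default nginx page through worker1.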

Ideas

- Active/Passive with EC2: etcd holds the cluster metadata (desired state) -> kubectl on a mini EC2 server -> on-premises goes down -> deploy the kube cluster from etcd -> application CI/CD
- On-premises Jenkins -> deploy containers to the cloud (public)
- Bot/service: on-premises container -> application/webapp check -> Jenkins/CI-CD remaps to EC2 -> auto-deploy container -> remap DNS via the GoDaddy API
- Active/Passive with EKS (question for AI)
- Upcoming ideas: HA -> DR

Deployment Images

Control Plane & Worker Nodes Join Commands:

Nginx POD: