Kubernetes ELK Stack Commands

Kubernetes ELK Environment Setup Commands

Directory Creation for Persistent Volumes Local Path for Elasticsearch

Execute on the Data Plane Node Where Elasticsearch Should Run

Create Elasticsearch ConfigMap

Create Logstash ConfigMap

Create Kibana ConfigMap

Set Hostname & SID on Each Node

hostname
sudo hostnamectl set-hostname control   # on the control node
sudo hostnamectl set-hostname data1     # on the first data node
sudo hostnamectl set-hostname data2     # on the second data node
sudo nano /etc/hosts
sudo rm /etc/machine-id
sudo systemd-machine-id-setup
hostnamectl | grep "Machine ID"

Router VM IP Reserve

control 192.168.1.31
data1 192.168.1.32
data2 192.168.1.33
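The `sudo nano /etc/hosts` step can also be scripted. The following is a minimal sketch, assuming the three reserved IPs listed above; the function names `print_hosts_entries` and `add_missing_hosts_entries` are hypothetical helpers, not part of any tool.

```shell
# Print /etc/hosts lines for the three nodes with reserved IPs.
print_hosts_entries() {
  cat <<'EOF'
192.168.1.31 control
192.168.1.32 data1
192.168.1.33 data2
EOF
}

# Append any entry whose hostname is not yet present in the given hosts file.
# The file argument defaults to /etc/hosts; pass a scratch file to try it out.
add_missing_hosts_entries() {
  hosts_file="${1:-/etc/hosts}"
  print_hosts_entries | while read -r ip name; do
    # -w matches the hostname as a whole word, -q keeps grep silent
    if ! grep -qw "$name" "$hosts_file"; then
      printf '%s %s\n' "$ip" "$name" >> "$hosts_file"
    fi
  done
}
```

Running `add_missing_hosts_entries` against `/etc/hosts` needs root privileges. Running it twice should leave the file unchanged the second time, since existing hostnames are skipped.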

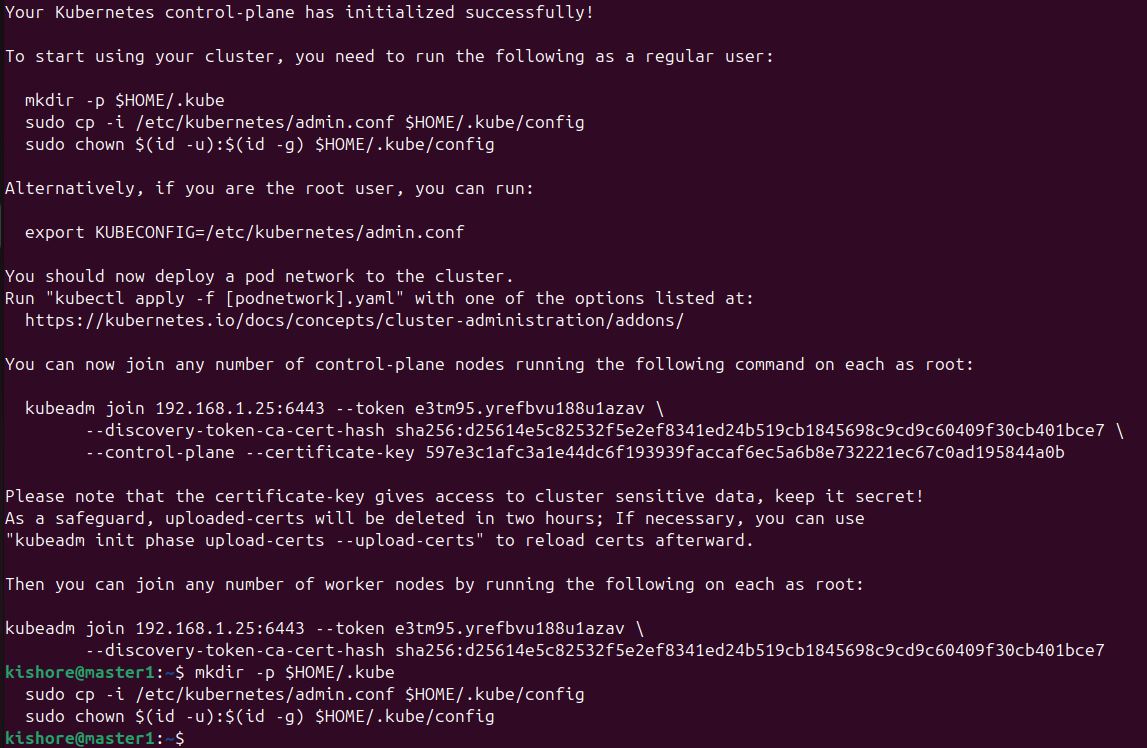

Phase 1: Prepare Kubernetes Environment

Directory Creation for Persistent Volumes Local Path for Elasticsearch

# Create the directory on the Ubuntu node that will run Elasticsearch (for multiple nodes, repeat on each VM)
sudo mkdir -p /opt/elasticsearch/data
sudo chmod 777 /opt/elasticsearch/data

Create Namespace

kubectl create namespace elk-stack

Create Storage Classes

# StorageClass is cluster-scoped, so metadata.namespace is omitted
cat << EOF | kubectl apply -f -
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: local-storage
provisioner: kubernetes.io/no-provisioner
volumeBindingMode: WaitForFirstConsumer
EOF

Create Persistent Volumes for Elasticsearch

# PersistentVolume is cluster-scoped, so metadata.namespace is omitted.
# It is named elasticsearch-pv to match the "kubectl delete pv elasticsearch-pv" commands used later.
cat << EOF | kubectl apply -f -
apiVersion: v1
kind: PersistentVolume
metadata:
  name: elasticsearch-pv
spec:
  capacity:
    storage: 10Gi
  accessModes:
    - ReadWriteOnce
  persistentVolumeReclaimPolicy: Retain
  storageClassName: local-storage
  local:
    path: /opt/elasticsearch/data
  nodeAffinity:
    required:
      nodeSelectorTerms:
        - matchExpressions:
            - key: kubernetes.io/hostname
              operator: In
              values:
                - data1 # Replace with your actual node name
EOF
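For the multi-node case mentioned above, the PersistentVolume manifest can be generated per node instead of edited by hand. This is a rough sketch: `render_es_pv` is a hypothetical helper, and the `elasticsearch-pv-<node>` naming scheme is an assumption made for illustration. Capacity, path, and storage class are taken from the manifest above.

```shell
# Print a local PersistentVolume manifest pinned to the given node name.
render_es_pv() {
  node="$1"
  cat <<EOF
apiVersion: v1
kind: PersistentVolume
metadata:
  name: elasticsearch-pv-${node}
spec:
  capacity:
    storage: 10Gi
  accessModes:
    - ReadWriteOnce
  persistentVolumeReclaimPolicy: Retain
  storageClassName: local-storage
  local:
    path: /opt/elasticsearch/data
  nodeAffinity:
    required:
      nodeSelectorTerms:
        - matchExpressions:
            - key: kubernetes.io/hostname
              operator: In
              values:
                - ${node}
EOF
}
```

A possible usage is `render_es_pv data1 | kubectl apply -f -`, repeated for each node that already has the `/opt/elasticsearch/data` directory.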

Phase 2: Deploy Elasticsearch

Create Elasticsearch ConfigMap

cat << EOF | kubectl apply -f -
apiVersion: v1
kind: ConfigMap
metadata:
  name: elasticsearch-config
  namespace: elk-stack
data:
  elasticsearch.yml: |
    cluster.name: "docker-cluster"
    network.host: 0.0.0.0
    discovery.type: single-node
    xpack.security.enabled: false
    xpack.monitoring.collection.enabled: true
EOF

Deploy Elasticsearch

cat << EOF | kubectl apply -f -
apiVersion: apps/v1
kind: Deployment
metadata:
  name: elasticsearch
  namespace: elk-stack
spec:
  replicas: 1
  selector:
    matchLabels:
      app: elasticsearch
  template:
    metadata:
      labels:
        app: elasticsearch
    spec:
      containers:
        - name: elasticsearch
          image: docker.elastic.co/elasticsearch/elasticsearch:8.11.0
          ports:
            - containerPort: 9200
            - containerPort: 9300
          env:
            - name: discovery.type
              value: single-node
            - name: ES_JAVA_OPTS
              value: "-Xms512m -Xmx512m"
            - name: xpack.security.enabled
              value: "false"
          volumeMounts:
            - name: elasticsearch-data
              mountPath: /usr/share/elasticsearch/data
            - name: elasticsearch-config
              mountPath: /usr/share/elasticsearch/config/elasticsearch.yml
              subPath: elasticsearch.yml
          resources:
            limits:
              memory: "1Gi"
              cpu: "1000m"
            requests:
              memory: "512Mi"
              cpu: "500m"
      volumes:
        - name: elasticsearch-data
          persistentVolumeClaim:
            claimName: elasticsearch-pvc
        - name: elasticsearch-config
          configMap:
            name: elasticsearch-config
---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: elasticsearch-pvc
  namespace: elk-stack
spec:
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: 10Gi
  storageClassName: local-storage
---
apiVersion: v1
kind: Service
metadata:
  name: elasticsearch
  namespace: elk-stack
spec:
  selector:
    app: elasticsearch
  ports:
    - port: 9200
      targetPort: 9200
      name: http
    - port: 9300
      targetPort: 9300
      name: transport
  type: NodePort
EOF
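After the Deployment is applied, it can help to wait until Elasticsearch actually answers before moving on to Logstash. The sketch below polls the `_cluster/health` endpoint and reads its `status` field. The helper names `es_health_status` and `wait_for_es`, the default URL, and the retry count are assumptions for illustration. The default URL presumes a port-forward to `svc/elasticsearch` on local port 9200.

```shell
# Read _cluster/health JSON on stdin and print the value of its "status" field.
es_health_status() {
  sed -n 's/.*"status"[[:space:]]*:[[:space:]]*"\([a-z]*\)".*/\1/p' | head -n 1
}

# Poll the health endpoint until the status is green or yellow.
# $1 = base URL (default http://localhost:9200), $2 = number of attempts (default 30)
wait_for_es() {
  url="${1:-http://localhost:9200}"
  tries="${2:-30}"
  i=0
  while [ "$i" -lt "$tries" ]; do
    status="$(curl -s "$url/_cluster/health" | es_health_status)"
    case "$status" in
      green|yellow) echo "$status"; return 0 ;;
    esac
    i=$((i + 1))
    sleep 2
  done
  return 1
}
```

A possible usage is `kubectl port-forward -n elk-stack svc/elasticsearch 9200:9200 &` followed by `wait_for_es`. Yellow is accepted here because a single-node cluster commonly reports yellow once it holds indices configured with replicas.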

Phase 3: Deploy Logstash

Create Logstash ConfigMap

cat << EOF | kubectl apply -f -
apiVersion: v1
kind: ConfigMap
metadata:
  name: logstash-config
  namespace: elk-stack
data:
  logstash.yml: |
    http.host: "0.0.0.0"
    path.config: /usr/share/logstash/pipeline
    xpack.monitoring.enabled: true
    xpack.monitoring.elasticsearch.hosts: ["http://elasticsearch:9200"]
  pipelines.yml: |
    - pipeline.id: beats-pipeline
      path.config: "/usr/share/logstash/pipeline/beats.conf"
  beats.conf: |
    input {
      beats {
        port => 5044
      }
    }

    filter {
      if [fields][log_type] == "iis" {
        grok {
          match => {
            "message" => "%{TIMESTAMP_ISO8601:timestamp} %{IPORHOST:server_ip} %{WORD:method} %{URIPATH:uri_stem} %{NOTSPACE:uri_query} %{NUMBER:port} %{NOTSPACE:username} %{IPORHOST:client_ip} %{NOTSPACE:user_agent} %{NOTSPACE:referer} %{NUMBER:status} %{NUMBER:substatus} %{NUMBER:win32_status} %{NUMBER:time_taken}"
          }
        }
        date {
          match => [ "timestamp", "yyyy-MM-dd HH:mm:ss" ]
        }
        mutate {
          convert => {
            "status" => "integer"
            "time_taken" => "integer"
            "port" => "integer"
          }
        }
        if [status] >= 400 {
          mutate {
            add_tag => [ "error" ]
          }
        }
      }
      # Add more filters for other log types if needed
    }

    output {
      elasticsearch {
        hosts => ["http://elasticsearch:9200"]
        index => "logs-%{[fields][log_type]}-%{+YYYY.MM.dd}"
      }
      stdout {
        codec => rubydebug
      }
    }
EOF

Deploy Logstash

cat << EOF | kubectl apply -f -
apiVersion: apps/v1
kind: Deployment
metadata:
  name: logstash
  namespace: elk-stack
spec:
  replicas: 1
  selector:
    matchLabels:
      app: logstash
  template:
    metadata:
      labels:
        app: logstash
    spec:
      containers:
        - name: logstash
          image: docker.elastic.co/logstash/logstash:8.11.0
          ports:
            - containerPort: 5044
            - containerPort: 9600
          env:
            - name: LS_JAVA_OPTS
              value: "-Xms256m -Xmx256m"
          volumeMounts:
            - name: logstash-config
              mountPath: /usr/share/logstash/config/logstash.yml
              subPath: logstash.yml
            - name: logstash-config
              mountPath: /usr/share/logstash/config/pipelines.yml
              subPath: pipelines.yml
            - name: logstash-config
              mountPath: /usr/share/logstash/pipeline/beats.conf
              subPath: beats.conf
          resources:
            limits:
              memory: "512Mi"
              cpu: "500m"
            requests:
              memory: "256Mi"
              cpu: "250m"
      volumes:
        - name: logstash-config
          configMap:
            name: logstash-config
---
apiVersion: v1
kind: Service
metadata:
  name: logstash
  namespace: elk-stack
spec:
  selector:
    app: logstash
  ports:
    - port: 5044
      targetPort: 5044
      name: beats
    - port: 9600
      targetPort: 9600
      name: api
  type: LoadBalancer # or NodePort if you don't have LoadBalancer
EOF
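The Logstash pipeline only applies the IIS filter when an event carries `[fields][log_type] == "iis"`, so the shipper has to set that field. The following sketch prints a minimal Filebeat configuration that does so. It assumes Filebeat is the Beats shipper in use; `render_filebeat_config`, the input id `iis-logs`, and both arguments are hypothetical and should be adapted to the real Logstash address and log path.

```shell
# Print a minimal filebeat.yml that tags events with fields.log_type: iis
# and ships them to the Logstash beats input.
# $1 = Logstash host:port, $2 = glob for the log files to read
render_filebeat_config() {
  logstash_addr="$1"
  log_glob="$2"
  cat <<EOF
filebeat.inputs:
  - type: filestream
    id: iis-logs
    paths:
      - '${log_glob}'
    fields:
      log_type: iis

output.logstash:
  hosts: ["${logstash_addr}"]
EOF
}
```

A possible usage is `render_filebeat_config 192.168.1.32:5044 '/var/log/iis/*.log' > filebeat.yml`, where the address and path are placeholders only. Because `fields_under_root` is left at its default, the value ends up under `fields.log_type`, which is the key the filter and the index name in `beats.conf` refer to.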

Phase 4: Deploy Kibana

Create Kibana ConfigMap

cat << EOF | kubectl apply -f -
apiVersion: v1
kind: ConfigMap
metadata:
  name: kibana-config
  namespace: elk-stack
data:
  kibana.yml: |
    server.host: "0.0.0.0"
    server.port: 5601
    elasticsearch.hosts: ["http://elasticsearch:9200"]
    xpack.security.enabled: false
    xpack.encryptedSavedObjects.encryptionKey: "something_at_least_32_characters_long"
EOF

Deploy Kibana
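The Kibana ConfigMap uses a placeholder for `xpack.encryptedSavedObjects.encryptionKey`. Before deploying, a random value of the required length can be generated instead. This is a small sketch; `gen_kibana_encryption_key` is a hypothetical helper that reads 16 random bytes and prints them as 32 hexadecimal characters.

```shell
# Print a random 32-character hexadecimal string (16 bytes from /dev/urandom).
gen_kibana_encryption_key() {
  head -c 16 /dev/urandom | od -An -tx1 | tr -d ' \n'
}
```

The output of `gen_kibana_encryption_key` can then be pasted into `kibana.yml` in place of `something_at_least_32_characters_long`.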

cat << EOF | kubectl apply -f -
apiVersion: apps/v1
kind: Deployment
metadata:
  name: kibana
  namespace: elk-stack
spec:
  replicas: 1
  selector:
    matchLabels:
      app: kibana
  template:
    metadata:
      labels:
        app: kibana
    spec:
      containers:
        - name: kibana
          image: docker.elastic.co/kibana/kibana:8.11.0
          ports:
            - containerPort: 5601
          volumeMounts:
            - name: kibana-config
              mountPath: /usr/share/kibana/config/kibana.yml
              subPath: kibana.yml
          resources:
            limits:
              memory: "512Mi"
              cpu: "500m"
            requests:
              memory: "256Mi"
              cpu: "250m"
      volumes:
        - name: kibana-config
          configMap:
            name: kibana-config
---
apiVersion: v1
kind: Service
metadata:
  name: kibana
  namespace: elk-stack
spec:
  selector:
    app: kibana
  ports:
    - port: 5601
      targetPort: 5601
      name: http
  type: LoadBalancer # or NodePort
EOF

Need to Filter These Commands and Use

# Check pods and services
kubectl get pods -n elk-stack -o wide
kubectl get pods -n elk-stack -w
kubectl get svc -n elk-stack -o wide

# Port forward to test locally
kubectl port-forward -n elk-stack svc/elasticsearch 9200:9200 &

# Test Elasticsearch
curl http://localhost:9200/_cluster/health

# Stop the background port forward
pkill -f "port-forward"

# Inspect the Elasticsearch pod (replace the pod name with your own)
kubectl describe pod elasticsearch-5bdbccc746-xnjkq -n elk-stack
kubectl logs elasticsearch-5bdbccc746-xnjkq -n elk-stack

# Inspect the persistent volume and claim
kubectl get pv
kubectl get pvc -n elk-stack
kubectl describe pvc elasticsearch-pvc -n elk-stack

# Inspect nodes and taints
kubectl get nodes -o wide
kubectl describe nodes
kubectl get nodes -o json | jq '.items[] | {name: .metadata.name, taints: .spec.taints}'

# Recreate the data directory on the Elasticsearch node
sudo mkdir -p /opt/elasticsearch/data
sudo chmod 777 /opt/elasticsearch/data

# Point the Deployment volumes at the PVC and the ConfigMap
kubectl patch deployment elasticsearch -n elk-stack -p '{"spec":{"template":{"spec":{"volumes":[{"name":"elasticsearch-data","persistentVolumeClaim":{"claimName":"elasticsearch-pvc"}},{"name":"elasticsearch-config","configMap":{"name":"elasticsearch-config"}}]}}}}'

# Remove Elasticsearch and its storage before re-applying the manifests
kubectl delete deployment elasticsearch -n elk-stack
kubectl delete pvc elasticsearch-pvc -n elk-stack
kubectl delete pv elasticsearch-pv

-----------------------------------------------------------------------------------------

kishore@master2:~$ history

sudo apt-get install open-vm-tools-desktop -y

# Disable swap and keep it disabled after reboot
free -h
sudo swapoff -a
cat /proc/swaps
sudo sed -i '/ swap / s/^\(.*\)$/#\1/g' /etc/fstab
sudo sed -i '/swap.img/s/^/#/' /etc/fstab
cat /etc/fstab | grep swap
free -h

# Set the hostname and regenerate the machine ID
sudo hostnamectl set-hostname master2
hostname
sudo nano /etc/hosts
sudo rm /etc/machine-id
sudo systemd-machine-id-setup
hostnamectl | grep "Machine ID"

# Install net-tools and check the network interfaces
sudo apt install net-tools
ifconfig

# Test Elasticsearch
curl http://localhost:9200
curl http://localhost:9200/_cluster/health
kubectl patch svc elasticsearch -n elk-stack -p '{"spec":{"type":"NodePort"}}'
kubectl get svc elasticsearch -n elk-stack

# Index a test document and search for it
curl -s -X POST http://localhost:9200/logs-2025.09.08/_doc -H 'Content-Type: application/json' -d '{
  "host": "replica-host-1", "msg": "hello from replica", "ts": "2025-09-08T08:00:00Z"
}'
curl -s 'http://localhost:9200/logs-*/_search?q=hello&pretty'

# Inspect the Elasticsearch pod (replace the pod name with your own)
kubectl get pods -n elk-stack
kubectl get pod elasticsearch-67959766b8-9qtjj -n elk-stack -o yaml
kubectl exec -it elasticsearch-67959766b8-9qtjj -n elk-stack -c elasticsearch -- sh
kubectl describe pod elasticsearch-67959766b8-9qtjj -n elk-stack
kubectl describe pod elasticsearch-67959766b8-9qtjj -n elk-stack | grep container

# List the container names in a pod, then count them
kubectl get pod elasticsearch-67959766b8-9qtjj -n elk-stack -o jsonpath='{.spec.containers[*].name}'
kubectl get pod <pod-name> -n <namespace> -o jsonpath='{.spec.containers[*].name}' | wc -w

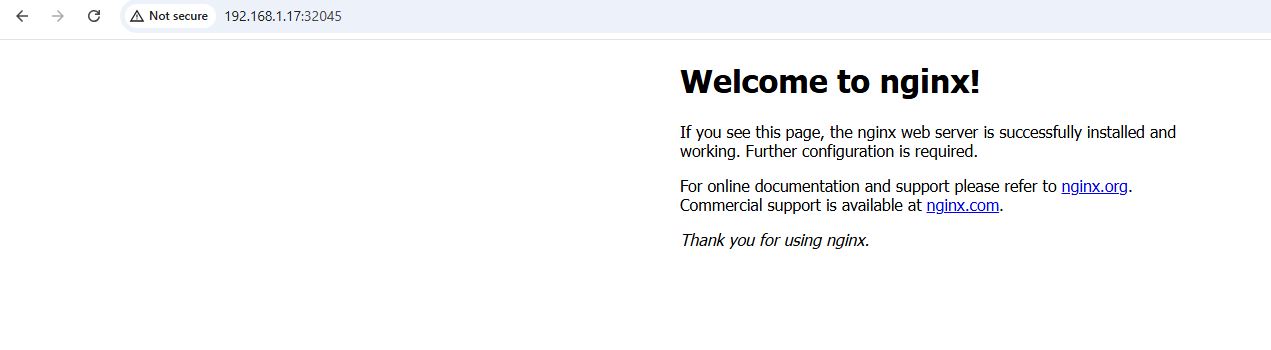

Management Commands

HA Proxy Real Time Logs

sudo journalctl -u haproxy -f

In Master Check Who Owns Leader Position for scheduler && controller-manager

kubectl get lease -n kube-system | grep scheduler
kubectl get lease -n kube-system | grep controller-manager
kubectl describe lease kube-scheduler -n kube-system
kubectl describe lease kube-controller-manager -n kube-system

Container Creation

kubectl run nginx123 --image=nginx --port=80
kubectl expose pod nginx123 --type=NodePort --port=80 --target-port=80 --name=nginx-service
kubectl get svc -o wide
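The lease checks in this section can be narrowed to just the node that currently holds leadership. The sketch below reads the lease's `spec.holderIdentity` and strips the trailing identifier. It assumes the holder identity has the form `<hostname>_<id>`, and the helper names `lease_holder_node` and `get_leader_node` are hypothetical.

```shell
# Strip everything from the last underscore, leaving the hostname part
# of a holder identity such as "control_<id>".
lease_holder_node() {
  printf '%s\n' "${1%_*}"
}

# Print the node currently holding the given kube-system lease,
# for example kube-scheduler or kube-controller-manager.
get_leader_node() {
  holder="$(kubectl get lease "$1" -n kube-system -o jsonpath='{.spec.holderIdentity}')"
  lease_holder_node "$holder"
}
```

A possible usage is `get_leader_node kube-scheduler` followed by `get_leader_node kube-controller-manager`, run from a master node with `kubectl` access.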